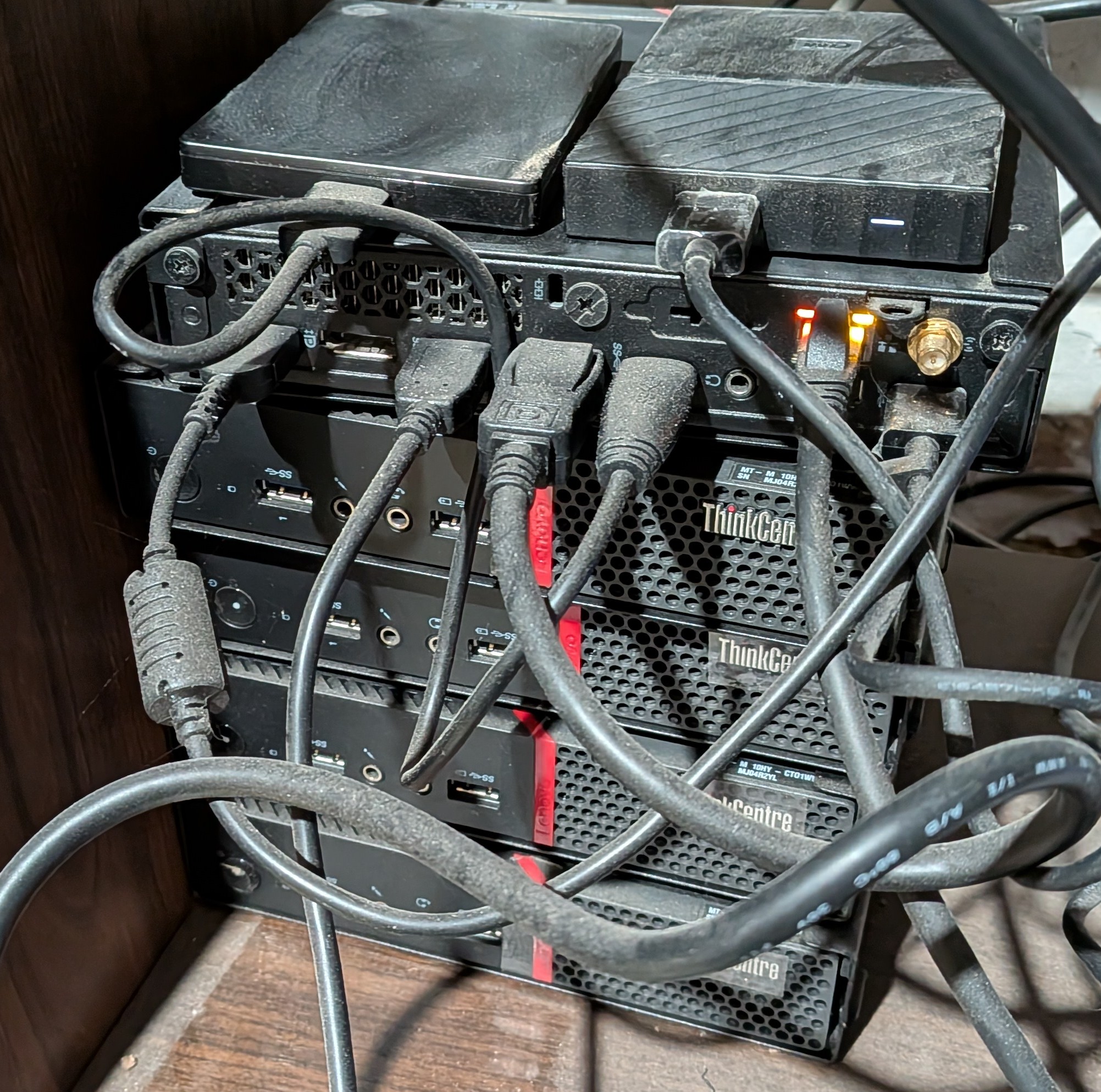

Using the llama.cpp inference runtime to run small GGUF transformer models on old, dusty hardware.

```shell
git clone https://github.com/ggerganov/llama.cpp
cd llama.cpp
```

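Build without GPU support (CPU only):

```shell
mkdir build
cd build
# CUDA is off by default; the flag only makes the CPU-only build explicit.
# (Older llama.cpp versions named this option LLAMA_CUBLAS.)
cmake .. -DGGML_CUDA=OFF
# Configuring alone does not compile anything; this step builds the binaries.
cmake --build . --config Release
# Return to the repository root so ./build/bin/... paths resolve.
cd ..
```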

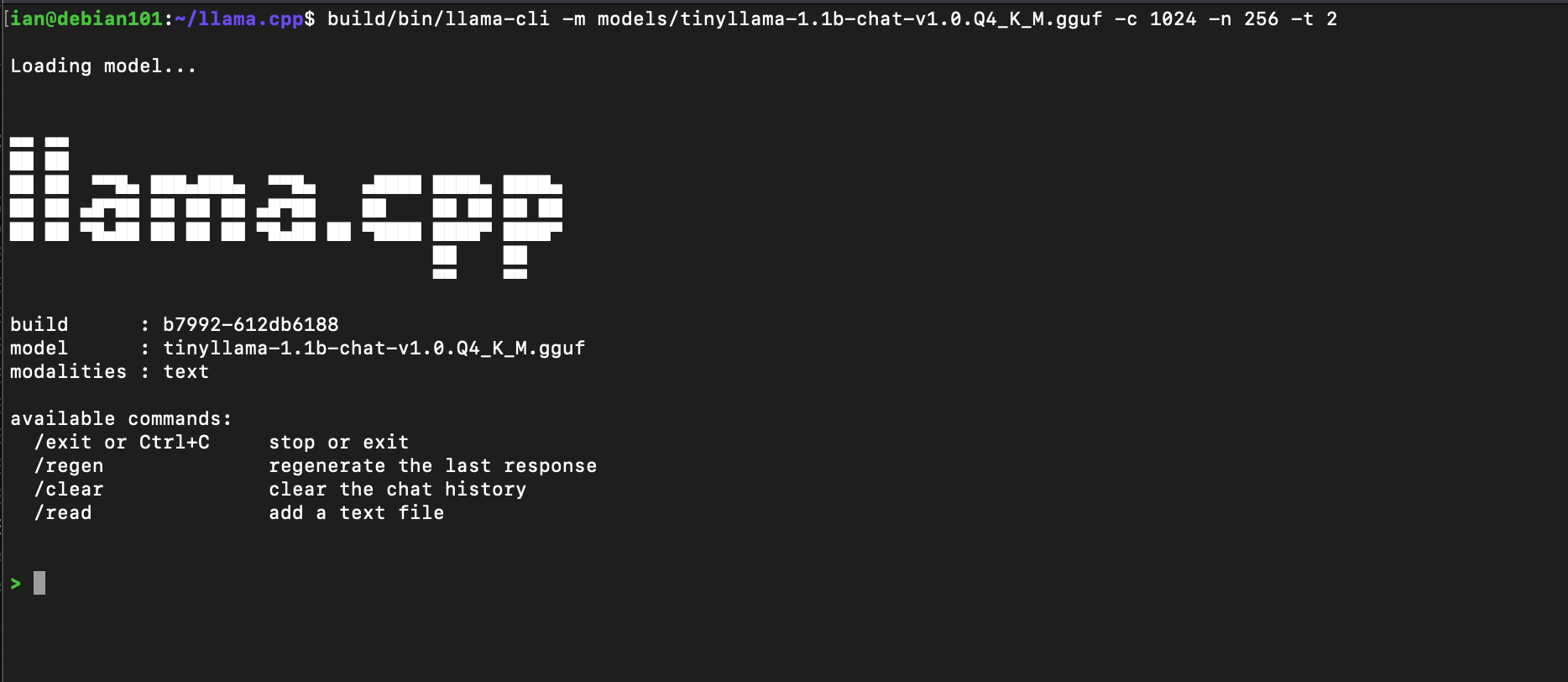

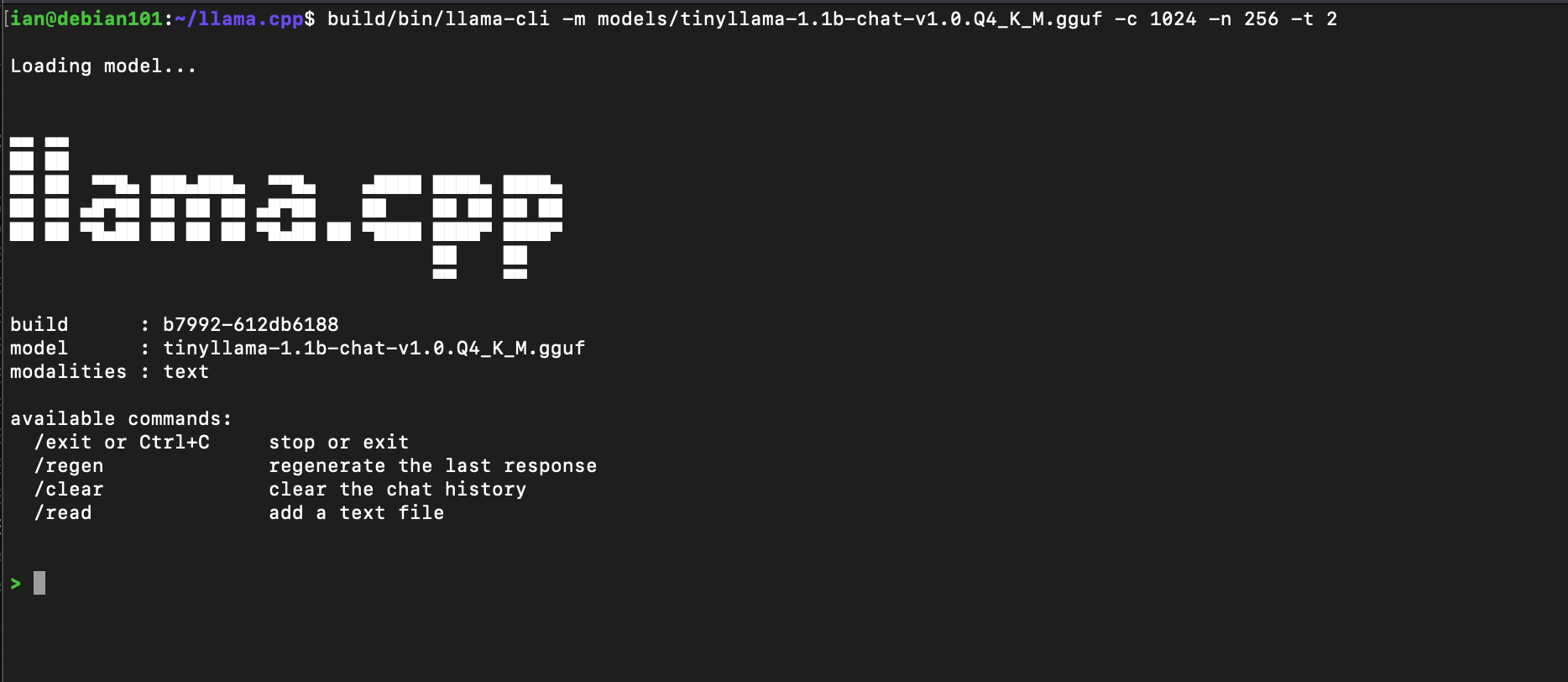
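On old hardware, the SIMD extensions the CPU supports can make a large difference to speed, since ggml's CPU kernels have optimized paths for instruction sets such as AVX and AVX2. A minimal sketch for checking them, assuming Linux (it reads `/proc/cpuinfo`); `cpu_simd_flags` is a hypothetical helper, not part of llama.cpp:

```shell
#!/bin/sh
# Hypothetical helper (not part of llama.cpp): print the SIMD extensions
# the CPU reports. Assumes Linux, where /proc/cpuinfo has a "flags" line.
# A different cpuinfo-style file can be passed as $1 (useful for testing).
cpu_simd_flags() {
    # Take the first "flags" line, put one flag per line, keep the SIMD ones.
    grep -m1 '^flags' "${1:-/proc/cpuinfo}" \
        | tr ' ' '\n' \
        | grep -E '^(ssse3|avx|avx2|fma|f16c|avx512f)$' \
        | sort
}

cpu_simd_flags
```

On a CPU that lists neither `avx` nor `avx2`, expect noticeably slower generation.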

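Run the model from the llama.cpp root directory. This assumes the TinyLlama GGUF file has already been downloaded into `models/`:

```shell
# -c: context size in tokens, -n: max tokens to generate, -t: CPU threads
./build/bin/llama-cli \
  -m models/tinyllama-1.1b-chat-v1.0.Q4_K_M.gguf \
  -c 1024 \
  -n 256 \
  -t 2
```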

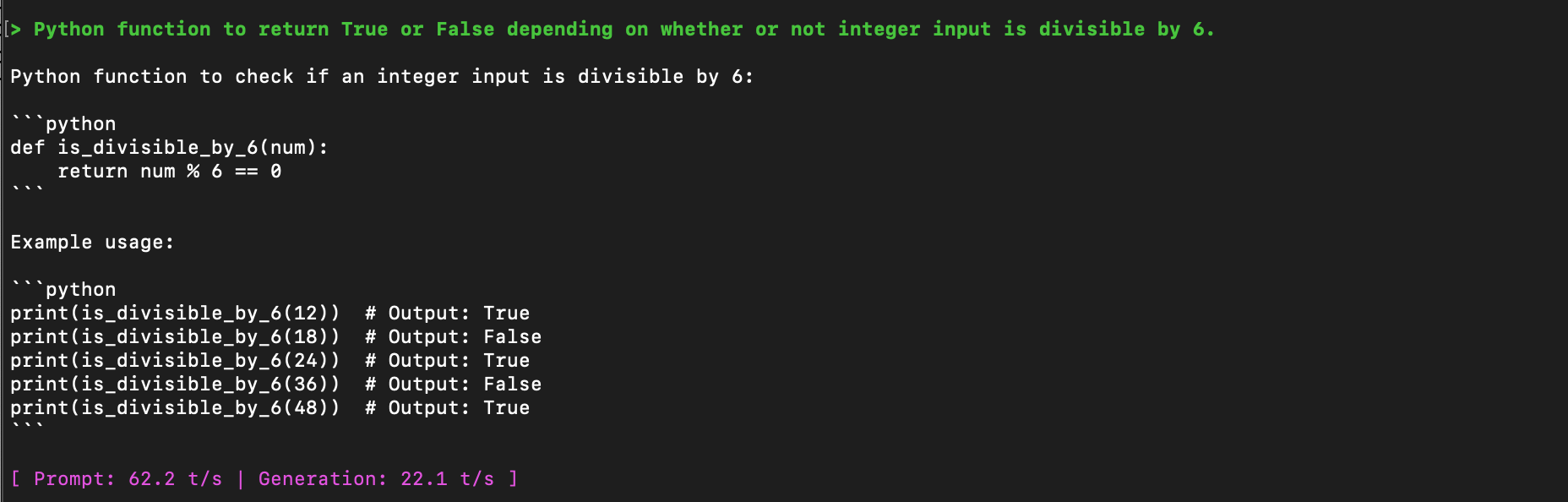

It runs, but the answers the model gives in these examples are wrong.
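The `-t` and `-c` values in the run command are the two knobs most worth tuning on weak hardware. A minimal sketch of two hypothetical helpers (not part of llama.cpp): `pick_threads` applies an assumed heuristic of using every logical CPU but leaving one free on machines with more than two, and `kv_cache_mib` gives a rough f16 key/value-cache size for a context length, assuming TinyLlama-1.1B's published shape (22 layers, 4 key/value heads, head dimension 64), which this post does not state:

```shell
#!/bin/sh
# Hypothetical helpers (not part of llama.cpp) for picking llama-cli flags.

# -t: use every logical CPU, but leave one free on machines with more than
# two so the system stays responsive. Pass a count as $1 to override nproc.
pick_threads() {
    n=${1:-$(nproc 2>/dev/null || echo 1)}
    if [ "$n" -gt 2 ]; then
        echo $((n - 1))
    else
        echo "$n"
    fi
}

# -c: rough f16 KV-cache size in MiB for a given context length.
# Assumed TinyLlama-1.1B shape (from its model config, not from this post):
# 22 layers, 4 key/value heads, head dimension 64, 2 bytes per value.
kv_cache_mib() {
    ctx=$1
    layers=22 kv_heads=4 head_dim=64 bytes=2
    # K and V each hold kv_heads * head_dim values per layer per token.
    echo $((2 * layers * kv_heads * head_dim * bytes * ctx / 1024 / 1024))
}

echo "suggested -t: $(pick_threads)"
echo "KV cache at -c 1024: $(kv_cache_mib 1024) MiB"
```

Under these assumptions the cache grows linearly with `-c`: about 22 MiB at `-c 1024` and 44 MiB at `-c 2048`, small next to the model weights but worth counting on machines with very little RAM. To use the thread helper, pass `-t "$(pick_threads)"` in place of `-t 2`.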